Platypus 2 70B, an open-source large language model (LLM), currently holds the top spot on Hugging Face’s Open LLM Leaderboard. The result reflects the research and development that went into the model.

The research team behind Platypus has introduced the Open-Platypus dataset, a carefully curated subset of other open datasets that is now available to the public. The team used it to fine-tune and merge LoRA modules, a process that lets the model retain the strong prior of pretrained LLMs while emphasizing specific domain knowledge.
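As a rough illustration of what merging a LoRA module means, the sketch below folds a low-rank update into a frozen weight matrix using the standard LoRA formulation, W' = W + (alpha / r) · B · A. It uses plain nested lists so it runs without dependencies. The function name `merge_lora` and the toy values are assumptions for illustration, not the Platypus team's code.

```python
def merge_lora(weight, b, a, alpha):
    """Return weight + (alpha / rank) * (b @ a) as a new nested list.

    weight: d_out x d_in frozen pretrained matrix
    b:      d_out x rank LoRA matrix
    a:      rank x d_in LoRA matrix
    alpha:  LoRA scaling hyperparameter
    """
    rank = len(a)
    scale = alpha / rank
    rows, cols = len(weight), len(weight[0])
    return [
        [
            weight[i][j] + scale * sum(b[i][k] * a[k][j] for k in range(rank))
            for j in range(cols)
        ]
        for i in range(rows)
    ]


# Toy example: a 2 x 2 identity weight with a rank-1 update (hypothetical values).
base = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]
lora_a = [[3.0, 4.0]]
merged = merge_lora(base, lora_b, lora_a, alpha=0.5)
```

Because the update is added to the pretrained weights rather than replacing them, the base model's behavior remains the starting point, which is one way to read the "strong prior" remark above.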

The researchers have been meticulous in their efforts to prevent test data leaks and contamination in the training data. This vigilance not only ensures the integrity of the model but also provides valuable insights for future research in the field. The Platypus models are a series of fine-tuned and merged variants based on the LLaMA and Llama 2 transformer architectures, and they are trained with LoRA using the PEFT library.

Platypus has demonstrated impressive performance in quantitative LLM metrics across various model sizes. Remarkably, it achieves this using less fine-tuning data and overall compute than other state-of-the-art fine-tuned LLMs. A 13B Platypus model can be trained on a single A100 GPU using 25k questions in just 5 hours, showcasing its efficiency.

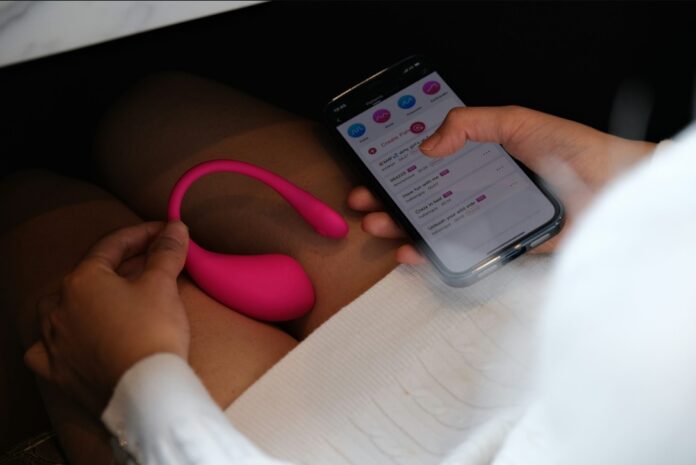

Platypus 2 AI LLM

The research has focused on optimizing LLMs using PEFT and LoRA with the Open-Platypus dataset. This dataset, derived from 11 open-source datasets, primarily aims to enhance LLMs’ proficiency in STEM and logic. The researchers have also detailed a similarity exclusion approach to minimize data redundancy and have conducted an in-depth exploration of the contamination issue in open LLM training sets.
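A similarity exclusion step of this kind can be sketched as follows. This is a minimal example, not the researchers' pipeline: it assumes cosine similarity over simple bag-of-words counts and a threshold of 0.8, both chosen for illustration, and the helper names are hypothetical. A production version would more likely compare sentence embeddings.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def exclude_similar(questions: list[str], threshold: float = 0.8) -> list[str]:
    """Greedily keep a question only if its similarity to every
    previously kept question is below the threshold (assumed value)."""
    kept: list[str] = []
    kept_vectors: list[Counter] = []
    for question in questions:
        vector = bag_of_words(question)
        if all(cosine_similarity(vector, other) < threshold for other in kept_vectors):
            kept.append(question)
            kept_vectors.append(vector)
    return kept


sample = [
    "What is the derivative of x squared?",
    "What is the derivative of x squared",
    "Name the capital of France.",
]
deduplicated = exclude_similar(sample)
```

The greedy pass keeps the first question in each near-duplicate group, so the order of the input affects which copy survives.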


The Platypus methodology has been designed to prevent benchmark test questions from leaking into the training set, thereby avoiding any bias in the results. The research uses Low-Rank Adaptation (LoRA) training and the Parameter-Efficient Fine-Tuning (PEFT) library to cut the number of trainable parameters, which saves both training time and cost.
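To see why LoRA reduces the trainable parameter count, compare a full update of one weight matrix with its low-rank replacement. The arithmetic below is a sketch only: the 4096 x 4096 layer size and rank 16 are hypothetical values chosen for illustration, not settings reported for Platypus.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, for illustration only.
d_out, d_in, rank = 4096, 4096, 16
full = full_update_params(d_out, d_in)        # 16,777,216
lora = lora_update_params(d_out, d_in, rank)  # 131,072
fraction = lora / full                        # 0.0078125, i.e. under 1%
```

Under these assumed dimensions, the adapter trains 1/128 of the parameters that a full update of the same matrix would require.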

As a fine-tuned extension of Llama 2, Platypus retains many of the foundation model’s constraints. It also introduces specific challenges of its own because of its targeted training. While Platypus is enhanced for STEM and logic in English, its proficiency in other languages is not guaranteed and can be inconsistent.

The developers have issued a cautionary note, advising that safety testing tailored to specific applications should be conducted before deploying Platypus. This is due to the potential misuse of the model for malicious activities. Users are also advised to ensure no overlap between Platypus’s training data and other benchmark test sets to avoid data contamination.
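One simple way to screen for such overlap is to flag any training item that shares a long run of consecutive words with a benchmark item. The sketch below assumes an 8-word window and exact matching after lowercasing. Both are illustrative choices, the function names are hypothetical, and this is not the procedure the Platypus developers describe.

```python
import re


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of n consecutive lowercase word tokens in the text."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def find_overlaps(train_items: list[str], benchmark_items: list[str], n: int = 8) -> list[int]:
    """Return indices of training items sharing any word n-gram with a benchmark item."""
    benchmark_ngrams: set[tuple[str, ...]] = set()
    for item in benchmark_items:
        benchmark_ngrams |= word_ngrams(item, n)
    return [
        index
        for index, item in enumerate(train_items)
        if word_ngrams(item, n) & benchmark_ngrams
    ]


train = [
    "A train leaves the station at noon travelling at sixty miles per hour.",
    "Explain why the sky appears blue.",
]
benchmark = [
    "Question: a train leaves the station at noon travelling at sixty miles per hour. How far?",
]
flagged = find_overlaps(train, benchmark)
```

Exact n-gram matching misses paraphrased questions, so a check like this is a first filter rather than a guarantee that no contamination exists.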

The Platypus 2 70B open-source large language model is a significant advancement in the field of AI, demonstrating impressive performance and efficiency. However, its use requires careful consideration and testing to ensure optimal results and to prevent misuse. For more information on Platypus 2, see the official website, which has download links to the papers, models, dataset and code.
