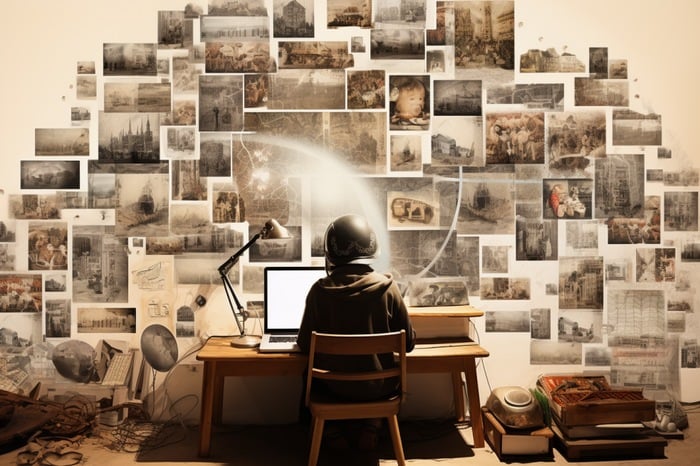

OpenAI’s latest innovation, the ChatGPT-4 Vision model, is a groundbreaking tool that has the capability to analyze images. This new feature allows users to upload an image and ask questions about it, with the AI model analyzing the image and responding accordingly. The applications of this technology are vast, ranging from language translation to solving mechanical issues, analyzing data and graphs, and even solving math problems or puzzles.

One of the most impressive additions to the ChatGPT AI model is its ability to analyze and describe photographs, providing detailed descriptions of the objects and even the people within them. However, it’s important to note that while it can describe what a person in a photo looks like, it won’t speculate on personal characteristics or make subjective judgments. Furthermore, it is programmed not to identify real people based on images, ensuring privacy and ethical considerations are upheld.

The AI model’s ability to recognize and describe objects and people in images is not limited to static objects or faces. It can also analyze and understand the humor in memes, adding a new dimension to its capabilities. This feature could be particularly useful in social media monitoring or digital marketing, where understanding the context and humor of memes is crucial.

Using OpenAI ChatGPT Vision to analyze images

Another useful feature of GPT-4 Vision is its ability to translate text in images. This could be particularly important for users who come across text in a foreign language that they don’t understand. By simply taking a photograph with your phone and uploading it to ChatGPT, you can have the AI model translate the text, breaking down language barriers and making information more accessible.
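For readers who would rather script this than use the ChatGPT app, the same "image plus question" idea can be sketched as a request payload for OpenAI's Chat Completions API. This is a minimal sketch under stated assumptions, not an official recipe: the helper name `build_vision_request` is hypothetical, the model name `gpt-4-vision-preview` is an assumption that may not match what your account offers, and the code only builds the payload without sending it.

```python
import base64


def build_vision_request(image_bytes, question,
                         model="gpt-4-vision-preview",  # assumed model name
                         mime_type="image/jpeg"):
    """Pair one image with one text question in a Chat Completions-style payload.

    Hypothetical helper for illustration only; it makes no network call.
    """
    # Embed the image as a base64 data URL so no separate hosting is needed.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"

    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can mix text parts and image parts.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
        # Cap on the length of the reply; the value is an arbitrary choice.
        "max_tokens": 300,
    }
```

To try it, read a photo with `open("sign.jpg", "rb").read()` (the file name is a placeholder), pass the bytes together with a question such as "Translate any text in this photo into English.", and hand the resulting dictionary to whichever HTTP or SDK client you use.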


ChatGPT-4 Vision also has practical applications in the kitchen. It can suggest meals based on images of food items in a fridge. By analyzing the contents of a fridge, it can generate detailed recipes, helping users to make the most of the ingredients they have on hand. This feature could be a game-changer for those who struggle with meal planning or want to reduce food waste.

The capabilities of ChatGPT-4 Vision extend to working in conjunction with DALL·E 3, OpenAI’s image-generation model. It can provide feedback on images generated by DALL·E 3 and suggest improvements, creating a synergistic relationship between the two AI models. This could lead to better results over successive attempts, as each round of feedback is used to refine the next image prompt. OpenAI explains a little more in the GPT-4V(ision) system card.

GPT-4V(ision)

“GPT-4 with vision (GPT-4V) enables users to instruct GPT-4 to analyze image inputs provided by the user, and is the latest capability we are making broadly available. Incorporating additional modalities (such as image inputs) into large language models (LLMs) is viewed by some as a key frontier in artificial intelligence research and development.

Multimodal LLMs offer the possibility of expanding the impact of language-only systems with novel interfaces and capabilities, enabling them to solve new tasks and provide novel experiences for their users. In this system card, we analyze the safety properties of GPT-4V. Our work on safety for GPT-4V builds on the work done for GPT-4 and here we dive deeper into the evaluations, preparation, and mitigation work done specifically for image inputs.”

Despite its impressive capabilities, it’s important to note that GPT-4 Vision is designed with privacy in mind. It can’t store, remember, or access any past images, ensuring that users’ data is not compromised. It can provide general descriptions about visual attributes of people, but it will not identify who the person might be, maintaining a respectful distance from personal identification.

OpenAI’s ChatGPT-4 Vision model is a powerful tool that can analyze images in a variety of ways. Whether it’s translating text in images, suggesting meals based on fridge contents, understanding humor in memes, or providing feedback on images generated by DALL·E 3, the applications of this technology are vast. As it continues to be rolled out to subscribers, it’s clear that this AI model has the potential to change the way we interact with images.
