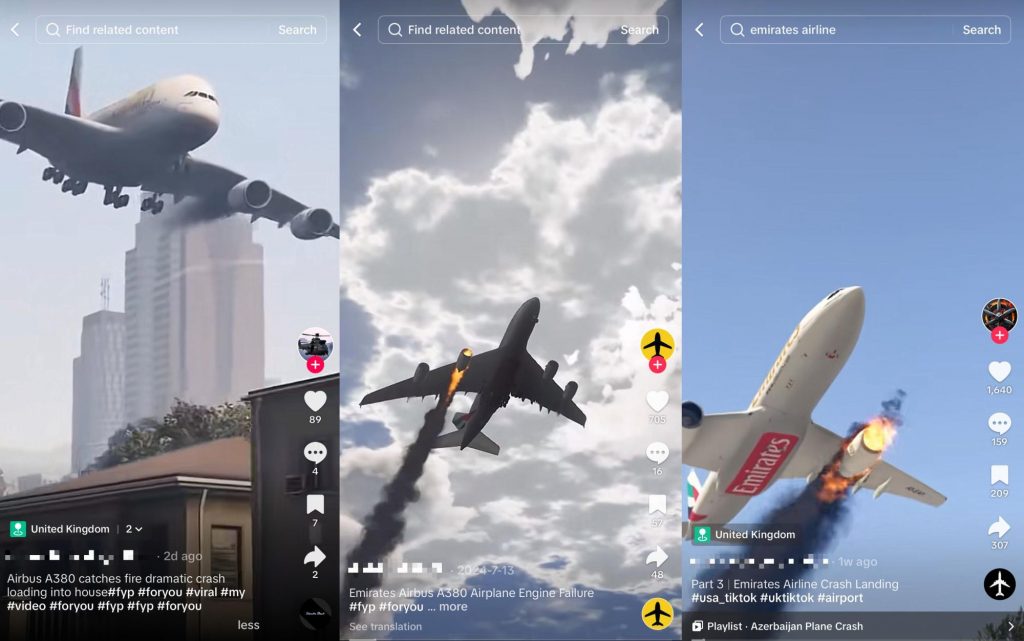

Middle Eastern carrier Emirates has issued a statement to debunk a video circulating online that depicts a crash of one of its aircraft. While fake or simulated crash videos are not new, this one appears to have been convincing enough to cause alarm and force the airline to issue an official statement.

Social media platforms slow to take action on fake Emirates crash video

In a post on X, Emirates said it was aware of the video and confirmed that it is fabricated content and untrue. The airline says it had asked various social media platforms to remove the video or make clear that it is digitally created footage to “avoid false and alarming information” from circulating. However, the platforms did not respond quickly enough, which made the official statement necessary to clear the air.

Emirates emphasised that safety is core to its brand and operations and that it regards such matters with the utmost seriousness. It urged all audiences to always check and refer to official sources.

We are aware of a video circulating on social media depicting an Emirates plane crash. Emirates confirms it is fabricated content and untrue.

We are in contact with the various social media platforms to remove the video or make clear that it is digitally created footage to avoid…

— Emirates (@emirates) January 4, 2025

In its statement, the airline didn’t name the social media platforms involved or provide any reference to the video or the aircraft it depicts. A quick check reveals that several simulated crash videos of Emirates Airbus A380 and Boeing 777 aircraft have been shared on TikTok.

Although TikTok has its own measures to ensure online safety and to tackle the threat of AI-generated content, most of these crash videos do not come with a warning.

TikTok does offer creators the option to declare that a video is AI-generated, and it sometimes labels videos automatically when its system detects that they are AI-generated.

However, from the looks of it, telling whether a video is AI-generated is not an easy task. There are plenty of deepfake videos online, and the scary part is that AI-generated videos will only become more realistic over time and can be misused for nefarious purposes.

Dangers of AI-generated content and lack of moderation

Going back to the Emirates situation, the challenge is getting these social media platforms to act quickly against AI-generated misinformation before it causes panic, civil unrest and threats to public safety.

Malaysia recently began enforcing its social media and instant messaging regulation, which aims to ensure online safety and hold platforms accountable for taking action against scams, sexual crimes against children and various other online harms. Unfortunately, the platforms are given no clear timeframe for taking down harmful content once it has been reported. By comparison, Indonesia requires its digital licensees to comply with urgent takedown requests within 4 hours.

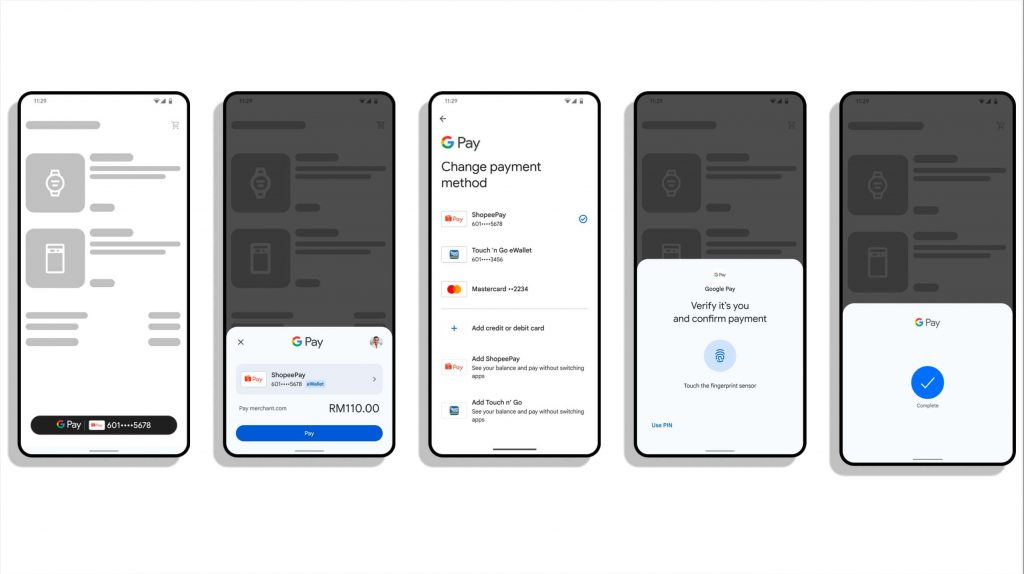

So far, only two out of eight platforms have obtained the required licence, while Google and X have yet to submit their applications.

As we’ve reported extensively, some platforms are notorious for allowing scammers to run scam ads and have shown a lack of urgency in removing them. If a company that makes billions of dollars in advertising revenue can’t even commit to basic checks on its advertisers, how can we depend on it to weed out harmful AI-generated content?