Recent trends show a sharp rise in online harm on social media platforms, including online scams and scam ads impersonating public figures and popular Malaysian brands. There are also growing concerns about sexual crimes against children and whether social media platforms are doing enough to keep their services safe for all users.

Ahead of Malaysia’s new social media platform regulation, TikTok invited us to its Transparency and Accountability Centre (TAC) in Singapore to show us how content is moderated and how the platform is kept safe for everyone, including children.

TikTok Transparency and Accountability Centre, Singapore

TikTok’s TAC in Singapore is the first in the region, and it gives visitors the opportunity to see how content is vetted and recommended to viewers. While TikTok is best known for entertainment and short videos, the platform emphasises safety, well-being and integrity.

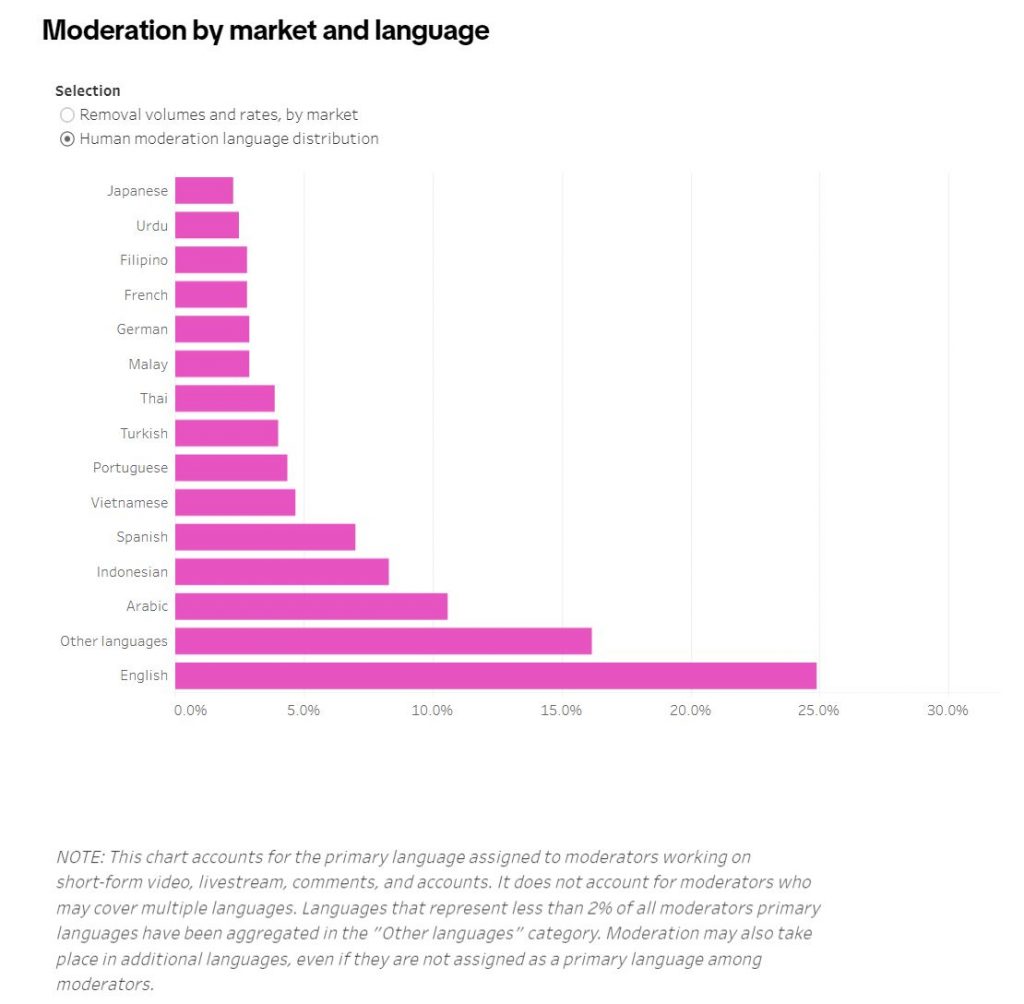

To keep its platform safe, TikTok currently has more than 40,000 moderators worldwide who manage content in more than 70 languages. These content moderators are spread across different markets, with specific teams that understand the local and legal context of each country.

While TikTok doesn’t share the specific number of moderators in Malaysia, its transparency report indicates a sizeable share of Asian languages in its team. Of its human moderators, 8.3% are assigned to Indonesian, 4.7% to Vietnamese, 3.9% to Thai, 2.9% to Malay, 2.8% to Filipino and 2.3% to Japanese.

Based on the assumption that TikTok has 40,000 human moderators, that’s approximately 3,320 moderators to handle the Indonesian language and 1,160 moderators exclusively for the Malay language.
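The estimate above is simple proportional arithmetic. Here is a minimal Python sketch of it, assuming the headcount is exactly 40,000 (TikTok only says "more than 40,000") and using the language shares quoted from the transparency report. The variable and function names are illustrative only.

```python
# Assumption: exactly 40,000 human moderators. TikTok only states
# "more than 40,000", so these are rough lower-bound estimates.
TOTAL_MODERATORS = 40_000

# Share of human moderators per language, in percent, as quoted above.
LANGUAGE_SHARE_PCT = {
    "Indonesian": 8.3,
    "Vietnamese": 4.7,
    "Thai": 3.9,
    "Malay": 2.9,
    "Filipino": 2.8,
    "Japanese": 2.3,
}


def estimate_moderators(total: int, share_pct: float) -> int:
    """Return the estimated headcount for a given percentage share."""
    return round(total * share_pct / 100)


estimates = {
    language: estimate_moderators(TOTAL_MODERATORS, pct)
    for language, pct in LANGUAGE_SHARE_PCT.items()
}

for language, count in estimates.items():
    print(f"{language}: about {count:,} moderators")
```

With these inputs, the sketch gives about 3,320 moderators for Indonesian and 1,160 for Malay, matching the figures above.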

In the name of transparency, TikTok also publishes a quarterly community guidelines enforcement report and a bi-annual government removal requests report. These reports provide a clearer view of how TikTok enforces its community guidelines, terms of service and advertising policies, as well as how it handles takedown requests from governments.

Using Machine and Human Technology to Moderate Content

Before a video appears on the For You feed (FYF), every upload goes through TikTok’s automated moderation technology, which checks various signals across the content, including visuals, audio, titles, descriptions and keywords. If the system is certain that the content violates the community guidelines, it is removed automatically.

However, in some instances where the degree of violation isn’t clear-cut, the automated moderation system will pass on the content to human moderators for further action.

According to TikTok, 0.9% of all videos uploaded were removed globally and 97.7% of the removals were done proactively. As a result, the bulk of inappropriate content is removed before it gets reported.

In Malaysia specifically, 1,249,806 videos were removed between January and March 2024 and 98.4% of them were removed proactively by the platform. According to TikTok, 79.0% of these videos were removed within 24 hours.

If content is wrongly flagged for a violation, users can file an appeal, which sends the content in question back to moderators to decide whether it should be restored to the platform.

TikTok’s community guidelines are updated regularly and made public to help users create and share safely. The recent update made on May 17 enhances clarity on hate speech and misinformation.

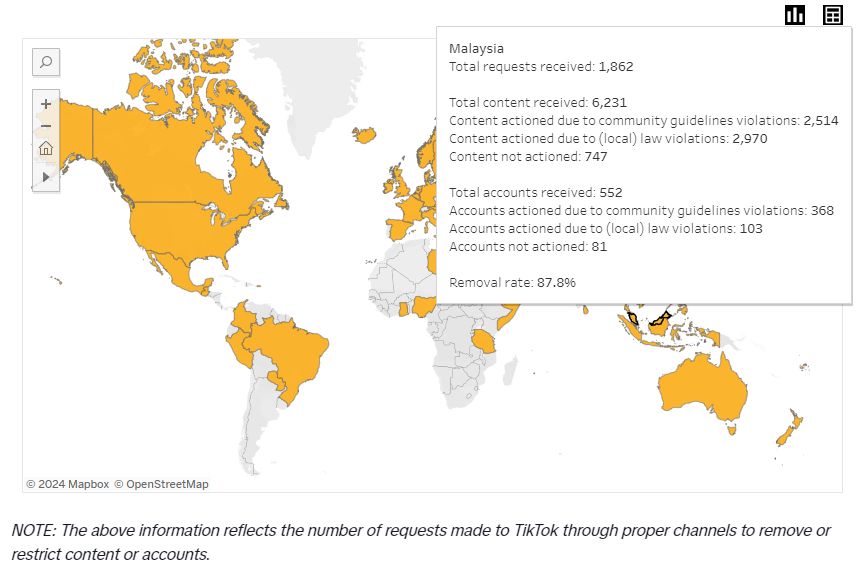

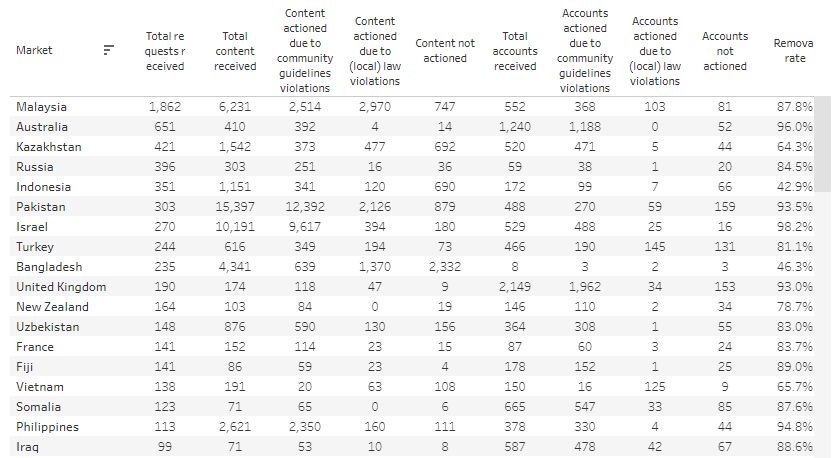

88% of Malaysia govt takedown requests violated TikTok guidelines and local law

It has been widely reported that Malaysia has the highest number of government takedown requests on TikTok. According to TikTok’s H2 2023 report, it received a total of 1,862 requests from the government to take down 6,231 pieces of content and 552 accounts.

For content, 2,514 (40.35%) have been removed as they were found to have violated TikTok’s community guidelines, and 2,970 (47.66%) were removed due to local law violations. The remaining 747 (12%) were not removed.

For reported accounts, 368 (66.67%) were removed as they were found to have violated community guidelines, and 103 (18.66%) were removed due to local law violations. The remaining 81 accounts (14.67%) were not actioned.

It is worth pointing out that TikTok will not remove content and accounts if no violations are found. The removal rate for government requests in Malaysia is 87.8%. As a comparison, the content removal rate in Australia is 96.0% while in Indonesia, it is 42.9%.
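The report figures quoted above can be cross-checked with a few lines of arithmetic. The Python sketch below assumes the 87.8% rate combines content and accounts, which is one reading that reproduces the number; the article does not spell out how the rate is calculated, and the names used here are illustrative.

```python
# Figures quoted above from TikTok's H2 2023 report for Malaysia.
content = {"reported": 6231, "guidelines": 2514, "local_law": 2970}
accounts = {"reported": 552, "guidelines": 368, "local_law": 103}


def removal_rate(*groups: dict) -> float:
    """Percentage of reported items removed, pooled across the given groups."""
    removed = sum(g["guidelines"] + g["local_law"] for g in groups)
    reported = sum(g["reported"] for g in groups)
    return 100 * removed / reported


content_rate = removal_rate(content)
account_rate = removal_rate(accounts)
# Assumption: the headline rate pools content and accounts together.
overall_rate = removal_rate(content, accounts)

print(f"Content removed:  {content_rate:.1f}%")
print(f"Accounts removed: {account_rate:.1f}%")
print(f"Overall removed:  {overall_rate:.1f}%")
```

Under that assumption, content alone comes to 88.0%, accounts alone to 85.3%, and the pooled rate to 87.8%.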

When it comes to government takedown requests, TikTok relies on its community guidelines as the baseline to balance harm versus free speech. However, it sometimes receives takedown requests for content that doesn’t violate its community guidelines, and its terms of service clearly state that TikTok respects local laws. In such instances, its legal team assesses the request against local laws to determine whether the content has violated any of them, and TikTok may take necessary action, including restricting the content in that region.

Safe environment for younger users

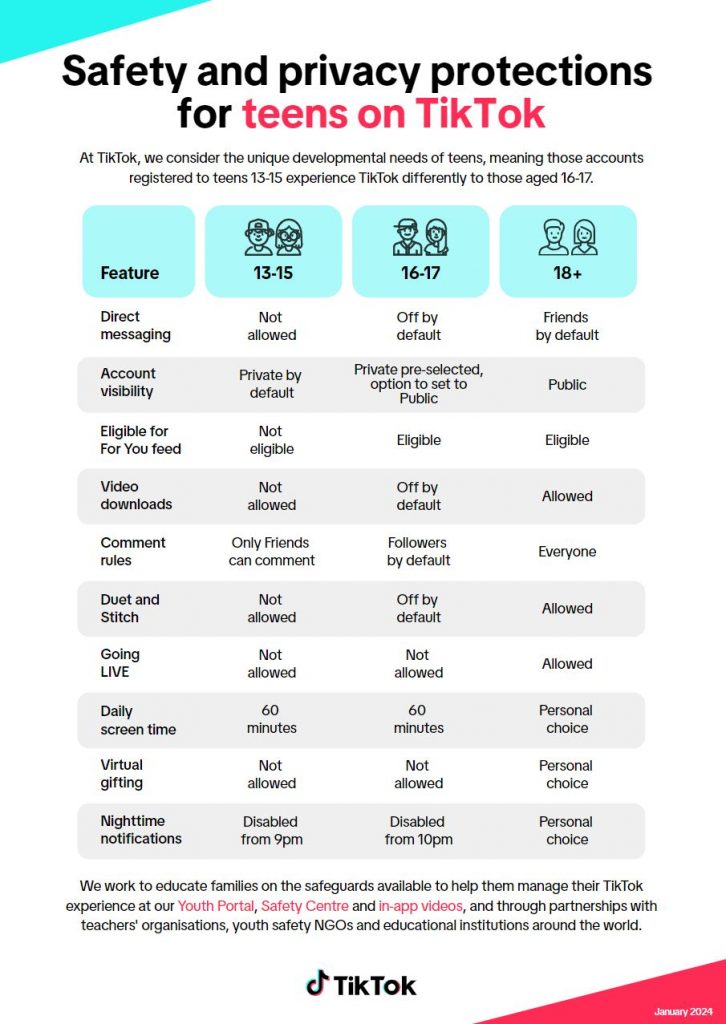

A growing concern is sexual crimes against children as younger users are groomed by online predators. To ensure a safe and trusted environment for young users, TikTok has implemented several measures to minimise such harms.

TikTok only allows users aged 13 and above to register on its platform. While younger children may find ways to circumvent its age checks, TikTok has algorithms in place to remove accounts and content created by underage users. In some countries such as South Korea and Indonesia, the minimum age is 14 years old.

For users below the age of 16 years old, TikTok has implemented several safeguards which include disabling direct messages and setting their account visibility to private by default. As an added measure, content uploaded by users below 16 will not show up on the FYF and only friends are allowed to leave comments.

On top of that, they have also disabled duet and stitch, as well as disabled downloads for their videos. These measures were designed to prevent exposure to potential online predators.

Once users are 16 or 17 years old, they are given the option to turn on more features that are disabled by default. These include direct messaging, comments, making videos public and allowing videos to appear on the FYF. TikTok also doesn’t allow users below 18 years old to go live on its platform.

For optimal digital well-being, TikTok has also implemented default limits of 60 minutes for daily screen time for users below 18 years old. It also restricts notifications at night as early as 9pm for users below 16 years old.

Triple-layer moderation to prevent scam ads on TikTok

One of the major issues plaguing the social media scene in Malaysia is the rise of scams and online gambling ads. Several platforms have notoriously allowed themselves to be misused to amplify scams, despite these ads violating their advertising policies and local laws.

According to TikTok, it has three layers of moderation when it comes to ads. All ads are moderated against its community guidelines, advertising policy and creative policies. Before an account is allowed to start a campaign, there’s an onboarding process where more information is required to prove that the advertiser is in good standing before it can access the ad manager.

In addition to safe content, TikTok also has measures to ensure brand safety for advertisers. By design, the algorithm ensures that the content that appears before and after the ad placement is suitable for the promoted content.

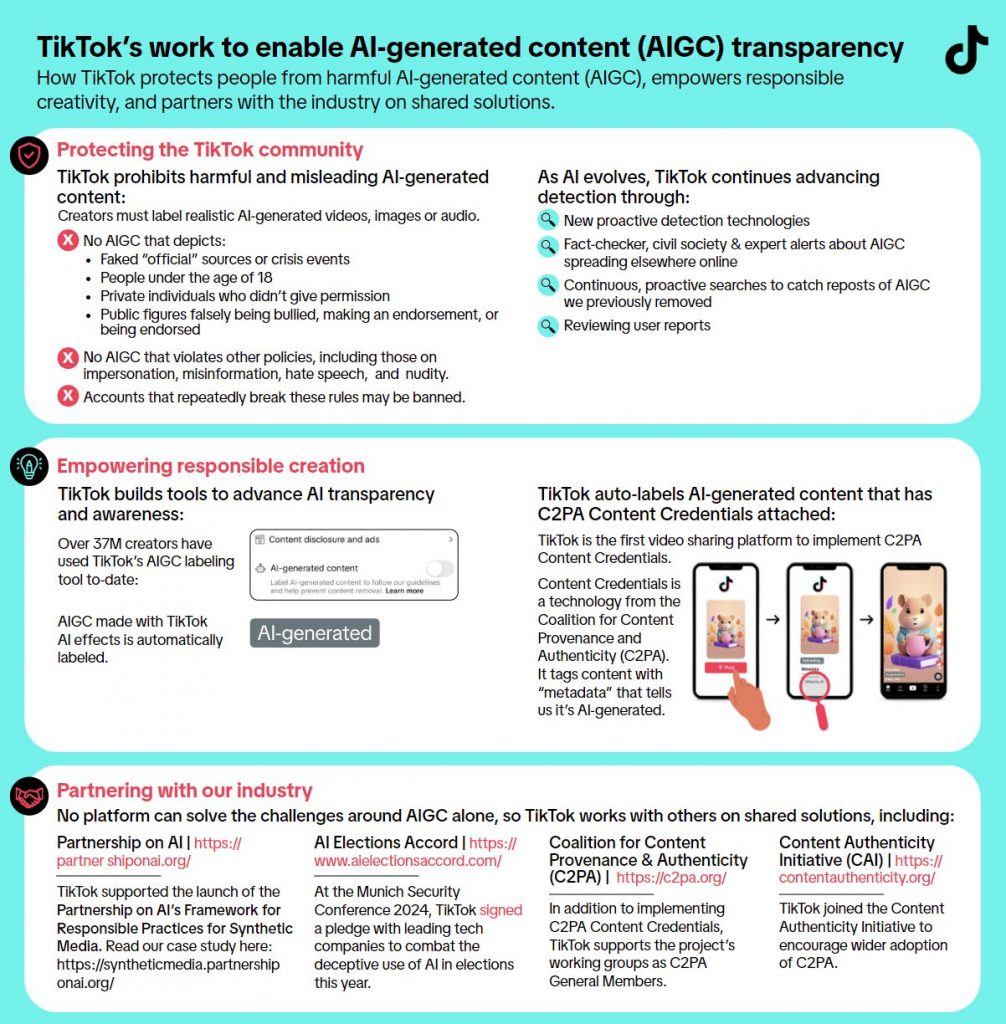

Protection against harmful and misleading AI-generated content

The rise of generative AI opens up a lot of opportunities for creativity, but it also raises concerns about harmful AI-generated content (AGC). To ensure the responsible use of generative AI content, TikTok aims to protect users by removing harmful and misleading AGC and requiring the labelling of realistic AGC. It also wants to empower users by enhancing transparency and education about the use of AGC, as well as by collaborating with industry peers and experts on the handling of AGC.

For starters, TikTok doesn’t allow AGC that depicts fake authoritative sources or crisis events. The platform also prohibits AGC of any real or fictional persons, which includes public figures used for political and commercial endorsement as well as private figures, including people below 18 years old.

Creators who intend to share AGC are urged to turn on the AI-generated content setting, which labels the video as AI-generated. It is said that TikTok is the first video-sharing platform to implement C2PA Content Credentials to tag AI-generated content in its metadata.

TikTok is aware that they need to catch up with the challenges of AGC and they aim to launch an AI media literacy campaign soon.